Title: Qualitatively characterizing neural network optimization problems

Authors: Ian J. Goodfellow, Oriol Vinyals, Andrew M. Saxe

Link: https://arxiv.org/abs/1412.6544

Quick Summary:

The main goal of the paper is to introduce a simple way to visualize the trajectory of weight optimization. The authors did not find that local minima or saddle points slow down SGD learning. They therefore suggest that some neural networks may be difficult to train because of the complex structure of their cost function, or because of the noise introduced by the minibatches.

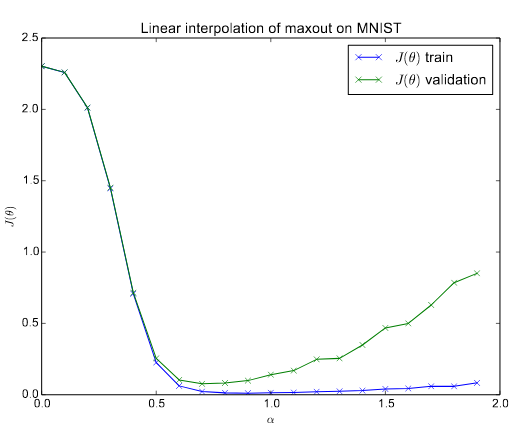

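This technique consists of evaluating [latex]J(\theta)[/latex] (the cost function) at [latex]\theta = (1-\alpha) \theta_0 + \alpha \theta_1[/latex] for different values of [latex]\alpha[/latex]. The authors set [latex]\theta_0 = \theta_i[/latex] (the initial weights) and [latex]\theta_1 = \theta_f[/latex] (the weights after training). In this setup [latex]\alpha = 0[/latex] gives the initial weights and [latex]\alpha = 1[/latex] gives the final ones, so plotting [latex]J[/latex] against [latex]\alpha[/latex] yields a one-dimensional cross-section of the objective function.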
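A minimal sketch of the interpolation, assuming a toy 1-D logistic regression in place of a neural network so that it runs with the Python standard library alone. The dataset `DATA`, the initialization `theta_i = (-1.0, 0.5)`, the learning rate, and the helper names (`cost`, `train`, `interpolate`, `cross_section`) are all hypothetical. Full-batch gradient descent stands in for SGD.

```python
import math

# Hypothetical toy problem (not from the paper): 1-D logistic regression with
# parameters theta = (w, b). A real network would use its full weight vector.
DATA = [(k / 10, 1 if k > 0 else 0) for k in range(-20, 21)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def cost(theta, data):
    """J(theta): mean cross-entropy over (x, y) pairs with y in {0, 1}."""
    w, b = theta
    total = 0.0
    for x, y in data:
        z = w * x + b
        s = z if y == 0 else -z  # the loss is softplus(s) for both labels
        total += max(s, 0.0) + math.log1p(math.exp(-abs(s)))
    return total / len(data)


def grad(theta, data):
    """Gradient of J with respect to (w, b)."""
    w, b = theta
    gw = gb = 0.0
    for x, y in data:
        err = sigmoid(w * x + b) - y
        gw += err * x
        gb += err
    return gw / len(data), gb / len(data)


def train(theta, data, lr=0.5, steps=200):
    """Plain full-batch gradient descent (a stand-in for SGD)."""
    for _ in range(steps):
        gw, gb = grad(theta, data)
        theta = (theta[0] - lr * gw, theta[1] - lr * gb)
    return theta


def interpolate(theta_0, theta_1, alpha):
    """theta = (1 - alpha) * theta_0 + alpha * theta_1, element-wise."""
    return tuple((1 - alpha) * a + alpha * b for a, b in zip(theta_0, theta_1))


def cross_section(theta_0, theta_1, data, alphas):
    """Evaluate J at each alpha on the line through theta_0 and theta_1."""
    return [cost(interpolate(theta_0, theta_1, a), data) for a in alphas]


theta_i = (-1.0, 0.5)               # hypothetical initial weights
theta_f = train(theta_i, DATA)      # weights after training
alphas = [k / 20 for k in range(21)]
curve = cross_section(theta_i, theta_f, DATA, alphas)
for a, j in zip(alphas, curve):
    print(f"alpha={a:.2f}  J={j:.4f}")
```

Because this toy cost is convex, its cross-section cannot contain a bump (a local maximum) along the line. The example only illustrates the mechanics of sampling [latex]J[/latex] between [latex]\theta_i[/latex] and [latex]\theta_f[/latex]. For a real network, `theta` would be the flattened weight vector and `cost` the training or testing loss.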

This shows whether the cost has bumps or flat regions along the straight line between the initial and final weights, on the training or testing data. The authors do not say whether they averaged the costs obtained on the training and testing datasets. I assume they did, because otherwise the two curves would be significantly different.
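Once the cross-section has been sampled, bumps and flats can be flagged mechanically. The sketch below uses definitions of my own. A bump is any increase of [latex]J[/latex] between consecutive values of [latex]\alpha[/latex], and a flat is a step whose change is smaller than a hypothetical tolerance `tol`. The function names are also hypothetical. The same functions could be run on a training-set curve and a testing-set curve separately, or on their average.

```python
def find_bumps(costs):
    """Indices i where J increases from sample i to sample i + 1."""
    return [i for i in range(len(costs) - 1) if costs[i + 1] > costs[i]]


def find_flats(costs, tol):
    """Indices i where J changes by less than tol between samples i and i + 1."""
    return [i for i in range(len(costs) - 1) if abs(costs[i + 1] - costs[i]) < tol]


# Made-up curve for illustration only (not data from the paper).
example = [2.0, 1.5, 1.5, 1.6, 1.0, 0.2]
print(find_bumps(example))        # [2]
print(find_flats(example, 0.05))  # [1]
```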